Comma-separated values (CSV) is the file format that open data advocates love to hate. Compared to JSON, CSV is clumsy; compared to XML, CSV is simplistic. Its reputation is as a tired, limited, it’s-better-than-nothing format. Not only is that reputation undeserved, but CSV should often be your first choice when publishing data.

It’s true: CSV is tired and limited, though superior to not having data. But there’s another side to those coins. One man’s tired is another man’s established. One man’s limited is another man’s focused. And “better than nothing” is, in fact, better than nothing, which is frequently the alternative to producing CSV.

There’s a lot about CSV that makes it a great format:

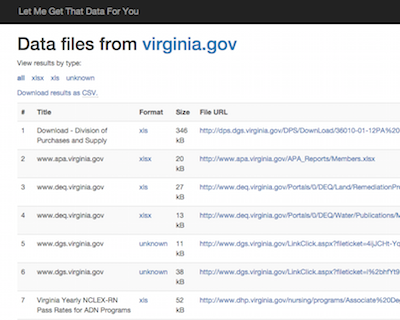

- It’s easy to produce. Many closed datasets exist as a spreadsheet on a government file server somewhere. The existence of File → Save as CSV makes it trivial for that data to be opened as CSV. Rendering it in a more advanced format, on the other hand, is far more difficult.

- It’s easy to consume. 99% of people have no idea what to do with an XML or JSON file. But CSV files can be read by any spreadsheet software, including dozens of free programs and even plugins that will render them within the browser, offering niceties that make it easy to browse, search, and manipulate that data.

- It’s easy for developers to produce and consume. Any programming language can handle CSV, since a CSV file is just an array of rows. There are no data types to worry about, checking a file’s structure is easy, and there are plenty of libraries to make the work trivial.
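To make the developer's side of that concrete, here is a minimal sketch in Python using the standard library's `csv` module. The sample records and the helper names `to_csv` and `from_csv` are invented for illustration; they are assumptions, not part of any particular dataset or tool.

```python
import csv
import io

# Hypothetical sample data, invented for illustration.
rows = [
    {"agency": "Parks", "year": 2013, "budget": 1250000},
    {"agency": "Roads, Bridges", "year": 2013, "budget": 9800000},
]


def to_csv(records):
    """Serialize a list of dicts to CSV text, using the first record's keys as the header."""
    buffer = io.StringIO(newline="")
    writer = csv.DictWriter(buffer, fieldnames=list(records[0].keys()))
    writer.writeheader()
    writer.writerows(records)
    return buffer.getvalue()


def from_csv(text):
    """Parse CSV text back into a list of dicts. Every value comes back as a string."""
    return list(csv.DictReader(io.StringIO(text, newline="")))


csv_text = to_csv(rows)
parsed = from_csv(csv_text)

print(csv_text)
print(parsed[1]["agency"])  # the embedded comma survives because the writer quoted the field
```

The library handles the quoting of values that contain commas, which is the one detail that tends to trip up hand-rolled CSV code. Note that the numbers come back as strings, since CSV carries no type information.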

Again, some of these strengths are weaknesses. The simplicity of the file format makes it terrible for rendering complex data, especially nested data. The lack of typing makes schemas generally impractical, and as a result validation of field contents is also generally impractical. There are vast swaths of data that can’t be reasonably represented as CSV, because they’re too complex.
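A short Python sketch shows both weaknesses at once. The nested record and the `flatten` helper below are hypothetical, invented for illustration: one parent record with a list of child records has to be flattened into repeated rows, and the boolean values come back from the file as plain strings.

```python
import csv
import io

# Hypothetical nested record, invented for illustration.
record = {
    "name": "City Hall",
    "open": True,
    "inspections": [
        {"date": "2013-01-15", "passed": True},
        {"date": "2013-06-02", "passed": False},
    ],
}


def flatten(rec):
    """Emit one row per inspection, repeating the parent fields on every row."""
    return [
        {
            "name": rec["name"],
            "open": rec["open"],
            "inspection_date": item["date"],
            "inspection_passed": item["passed"],
        }
        for item in rec["inspections"]
    ]


flat_rows = flatten(record)

buffer = io.StringIO(newline="")
writer = csv.DictWriter(buffer, fieldnames=list(flat_rows[0].keys()))
writer.writeheader()
writer.writerows(flat_rows)

# Read the CSV text back in, as a consumer of the file would.
round_tripped = list(csv.DictReader(io.StringIO(buffer.getvalue(), newline="")))

# The failed inspection is now the string "False", which Python treats as truthy.
print(round_tripped[1]["inspection_passed"], bool(round_tripped[1]["inspection_passed"]))
```

Flattening is only one of several possible workarounds, and every one of them pushes work onto the consumer, who has to know that the parent fields repeat and that `"False"` is meant to be a boolean.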

Many datasets that can be represented as CSV should be represented as CSV. CSV lowers the barriers to both producing and consuming open data, and it’s crucial that we continue to lower the bar for what counts as a minimum viable product for open data. So knock CSV if you must, but please also produce CSV, to make sure that your data can be used widely and easily.